Can Blazor WebAssembly apps be pre-rendered for SEO? Is it possible to pre-render Blazor WebAssembly for search engines without the ASP.NET Core hosted model? This article walks through how to pre-render your Blazor site for SEO while deploying it as a pure static web app instead of an ASP.NET Core hosted app.

Note:

This post is based solely on my own blog, built with Blazor WebAssembly .NET 5. My biggest motivation is to achieve pre-rendering without an actual ASP.NET Core server, because my hosting is dedicated to static web apps. The post does not cover using this technique to improve loading performance (for which Blazor server-side pre-rendering is highly recommended); it targets SEO only.

Problem

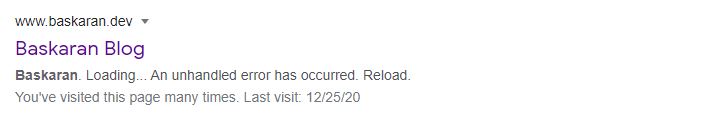

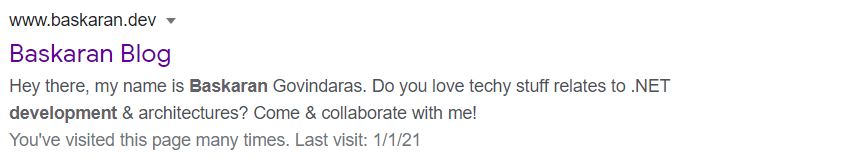

I launched my Blazor WebAssembly .NET 5 blog around November 2020, with CloudFlare managing my DNS. Like everybody else launching their first site, I began watching Google search results to see when my blog would be indexed. Two weeks later, my blog was indexed for the first time, but the results were not what I wanted, as shown below:

I was expecting the crawler bot to at least render the content of my index.razor component page, but it skipped the actual content and only rendered what was in the index.html file, whose first text was 'Baskaran. Loading... An unhandled error has occurred. Reload'.

From there, I decided to capitalize on meta tags and added a description meta tag like the one below to my index.html and to each of my Razor pages:

```html
<meta name="description" content="your-text-description" />
```

Home page (index.html)

It worked perfectly with index.html, which solved one of the main problems.

Razor component pages

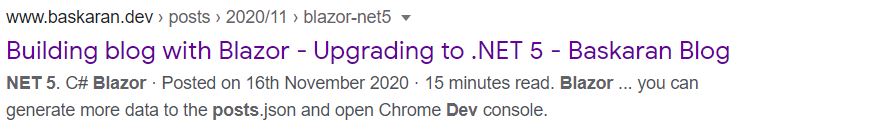

It was unsuccessful on my Razor pages: the crawler did not pick up their meta tags. However, it could still crawl the content of my site, which reassured me that Google can crawl WebAssembly sites.

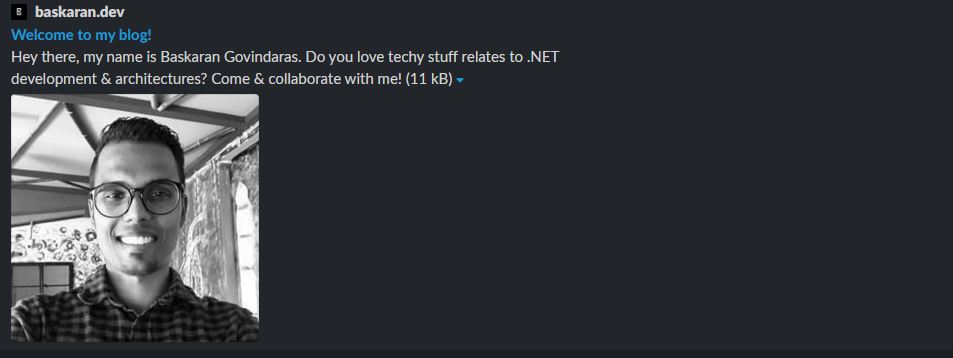

Slack crawler bots

Furthermore, I tested other crawler bots (e.g. the Slack bot) with the same result: they could only preview links from index.html and not from the other Razor component pages.

Why can't search engines identify the <head> element of Blazor WebAssembly Razor pages?

Let's get down to the basics of Blazor WebAssembly. The idea behind WebAssembly is that it gives developers the flexibility to use a language such as C# instead of JavaScript for code that runs in the browser. Blazor WebAssembly downloads the .NET runtime (itself compiled to WebAssembly) together with your app's .NET assemblies, runs them in the browser and updates the page through JavaScript interop, so you can do full-stack .NET development. However, the end result behaves like a single-page application (SPA): on navigation, the page is not re-rendered by the server; only the specific components that need new data are re-rendered in the browser.

To put everything together in our scenario: Blazor only returns index.html plus the runtime and assemblies the browser needs, and all rendering happens on the client. On each navigation only the content inside the HTML <body> element changes; the <head> element stays exactly as it was after the initial load of index.html. That explains why meta tags are not discoverable on Razor component pages. Microsoft's documentation does not warn users about this before they choose Blazor WebAssembly, which can be a big disadvantage for sites that run fully on the client side and require SEO.

Recently, Microsoft launched pre-rendering with Blazor in .NET 5. That solution requires ASP.NET Core in the deployed environment, and if you run your own IIS/NGINX server it solves all the issues described below. However, I only want to solve SEO, and I would prefer to keep the same client-side experience. So what I'm going to share is a solution that does not require ASP.NET Core in the deployed environment, which means you can still deploy your site as a static web app (e.g. Azure Static Web Apps or Netlify).

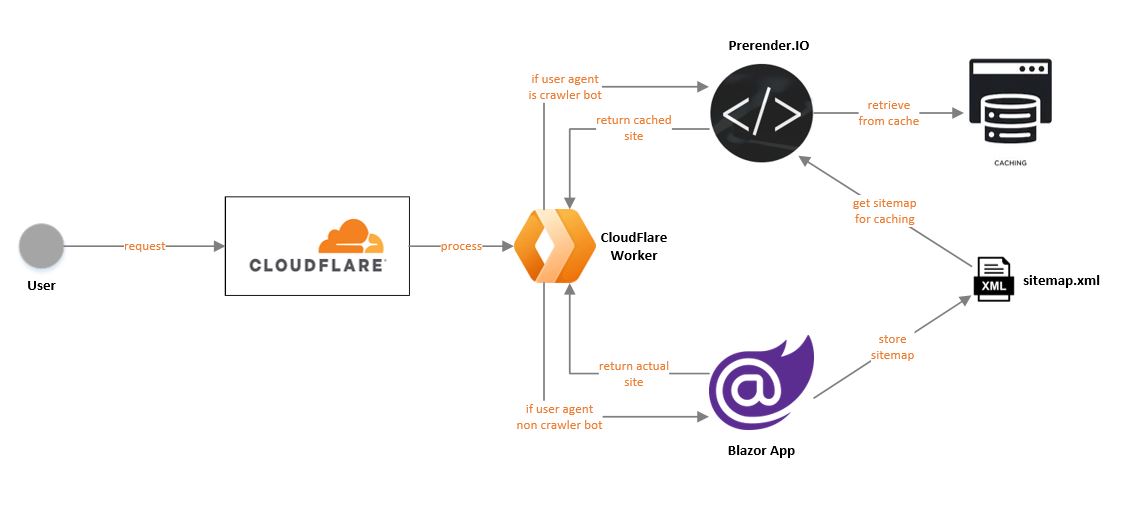

Solution architecture

We don't need a complicated setup to achieve this. All we need are two main components, CloudFlare Workers and Prerender.IO, which do the magic for us!

Process flow:

- A user request flows through CloudFlare DNS.

- Each requested URL is dispatched to a CloudFlare Worker, which acts as middleware.

- The CloudFlare Worker determines whether the request comes from a user or a bot using the user-agent header.
  - If the user-agent belongs to a user, the page is served from the live Blazor site.
  - If the user-agent belongs to a crawler bot, the Worker requests the cached page (with its meta tags) from Prerender.IO.

- Prerender.IO retrieves the page from its cache and returns it to the CloudFlare Worker.

- For each requested page, Prerender.IO has a copy of the URLs from the Blazor site's sitemap.xml, which it uses to decide which page to return.

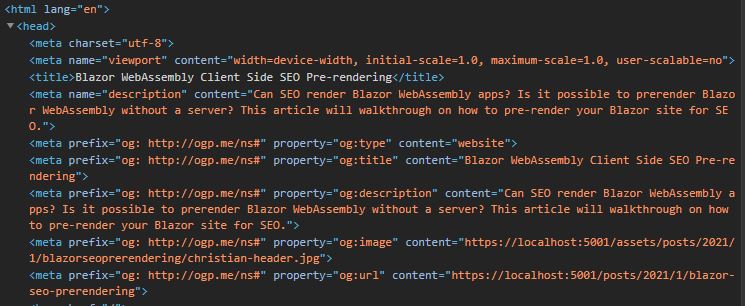

Blazor Head Updater Razor Component

Firstly, we need a component that updates titles, meta tags and links dynamically on each navigation and can modify the <head> element. I could have done it with simple Blazor JavaScript interop, but I came across a Blazor community package that helps add and update head elements. The NuGet package is called Toolbelt.Blazor.HeadElement.

The author has fully documented how to use the package, and it's pretty straightforward. For reference, you can check out my blog repository on GitHub.

Once configured, on each navigation you can inspect the page elements to check whether the page's <head> element is updated, as below:

SEO Meta tags benefits:

- Meta description - shows a short summary of your page to crawler bots

- Open Graph meta tags - used to render link previews in posts (e.g. Facebook, Twitter, LinkedIn, Slack, etc.)
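For comparison, the plain JavaScript interop route mentioned above could look roughly like the sketch below. It is a hypothetical helper, not the Toolbelt.Blazor.HeadElement API: the names setMeta, updateHead and window.headUpdater are made up for illustration. It creates each description or Open Graph tag once and then only updates its content, so repeated navigations don't pile up duplicate tags.

```javascript
// Hypothetical JS-interop helper (NOT the Toolbelt.Blazor.HeadElement API).
// Creates or updates a <meta> tag identified by attr ("name" or "property") and key.
function setMeta(doc, attr, key, content) {
  let tag = doc.head.querySelector(`meta[${attr}="${key}"]`);
  if (!tag) {
    // Tag not present yet: create it once and attach it to <head>
    tag = doc.createElement("meta");
    tag.setAttribute(attr, key);
    doc.head.appendChild(tag);
  }
  tag.setAttribute("content", content);
  return tag;
}

// Applies title, description and Open Graph tags for the current page.
function updateHead(doc, { title, description, image }) {
  if (title) {
    doc.title = title;
    setMeta(doc, "property", "og:title", title);
  }
  if (description) {
    setMeta(doc, "name", "description", description);
    setMeta(doc, "property", "og:description", description);
  }
  if (image) {
    setMeta(doc, "property", "og:image", image);
  }
}

// Expose the helper to Blazor only when running in a browser
if (typeof window !== "undefined") {
  window.headUpdater = { update: (meta) => updateHead(document, meta) };
}
```

From a Razor component you could then call something like `await JS.InvokeVoidAsync("headUpdater.update", new { title = "My post", description = "Summary" });` with an injected IJSRuntime. Keep in mind that, like the package, this only changes the DOM in the browser, so crawlers that don't run JavaScript still need the pre-rendered copy described below.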

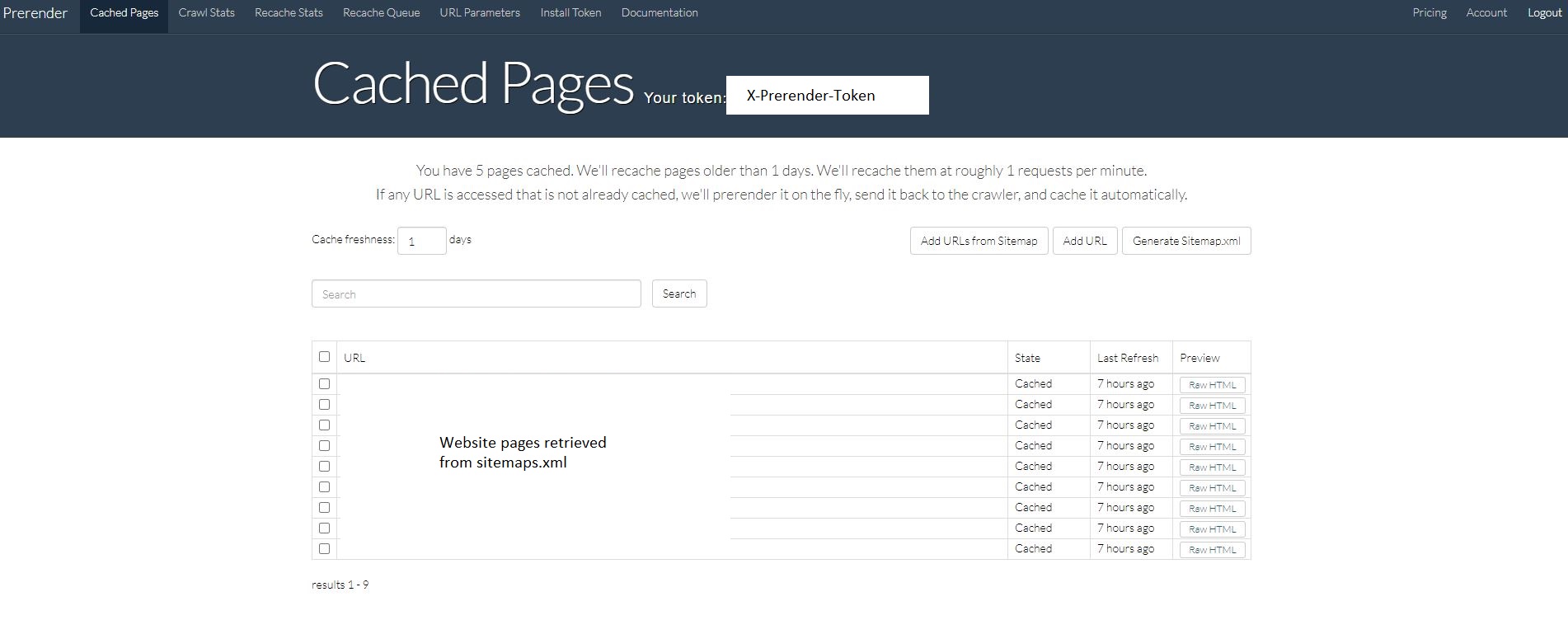

Sitemaps

In short, sitemaps are the blueprint of your site. A sitemap is a file that provides information about the pages on your site, and search engines like Google read sitemap.xml to crawl your site. You can specify when each page was last updated, how often it changes, and any alternate language versions of a page.

Google recommends a sitemap.xml for large websites with lots of page links, but for our use case it's a MUST: it not only benefits Google search indexing but also services like Prerender.IO (discussed in the next section), which can read all the URLs from your sitemap.xml and cache them! Simply click Add URLs from Sitemap, and it will load all the configured URLs and cache them immediately.
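If you don't have a sitemap yet, a small build-time script can generate one from your list of routes. The sketch below assumes you maintain that list yourself; buildSitemap, the page objects and the example URLs are hypothetical. It emits the standard sitemaps.org format with the optional lastmod and changefreq fields mentioned above.

```javascript
// Hypothetical build-time helper: turns a list of routes into sitemap.xml
// content following the sitemaps.org 0.9 schema.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildSitemap(baseUrl, pages) {
  const entries = pages.map(({ path, lastmod, changefreq }) => {
    // Resolve each route against the site root to get an absolute URL
    const loc = new URL(path, baseUrl).toString();
    const lines = [`    <loc>${escapeXml(loc)}</loc>`];
    // lastmod uses W3C date format, e.g. 2020-11-30
    if (lastmod) lines.push(`    <lastmod>${escapeXml(lastmod)}</lastmod>`);
    // changefreq is a hint such as "daily", "weekly" or "monthly"
    if (changefreq) lines.push(`    <changefreq>${escapeXml(changefreq)}</changefreq>`);
    return `  <url>\n${lines.join("\n")}\n  </url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

// Example usage with hypothetical routes
const sitemapXml = buildSitemap("https://example.com/", [
  { path: "/", changefreq: "weekly" },
  { path: "/blog/my-first-post", lastmod: "2020-11-30" },
]);
console.log(sitemapXml);
```

In a real build you would write the result into the published wwwroot folder (for example with Node's fs.writeFileSync) so it is served at /sitemap.xml alongside index.html.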

Prerender.IO

Prerender.IO is a SaaS solution that allows search engines to crawl an SEO-friendly version of single-page applications (SPAs). Search bots try to crawl your pages, but many of them only see index.html and not the updated page components. Prerender.IO renders your subscribed pages in a browser, saves them as static HTML that includes the <head> element updated by JavaScript, and returns that to the crawlers!

Note:

Google recognizes this technique as Dynamic Rendering, and Googlebot doesn't consider it cloaking as long as the dynamically rendered version produces similar content. In fact, Google mentions common dynamic renderers like Prerender.IO right here.

Based on the solution architecture above, we need pre-rendering to take effect only when a page is requested by a crawler, not by a user. This approach does not require an ASP.NET Core/Java service in the environment where we host the static pages, and the pages can still be crawled immediately and much faster!

However, we are not done yet. Prerender.IO has a limitation: the initial request has to go through a middleware that determines, based on the user-agent, whether it comes from a user or a bot. The setup suggested in its documentation requires a hosted server running that middleware, which detects crawler bots before deciding how to process the request. With that, let's proceed to the next section, where the magic happens!

Warning:

The free Prerender.IO plan can cache only up to 200 pages. If you have a large site, consider the Startup plan, which costs about $9 USD per month with 30-day cache freshness.

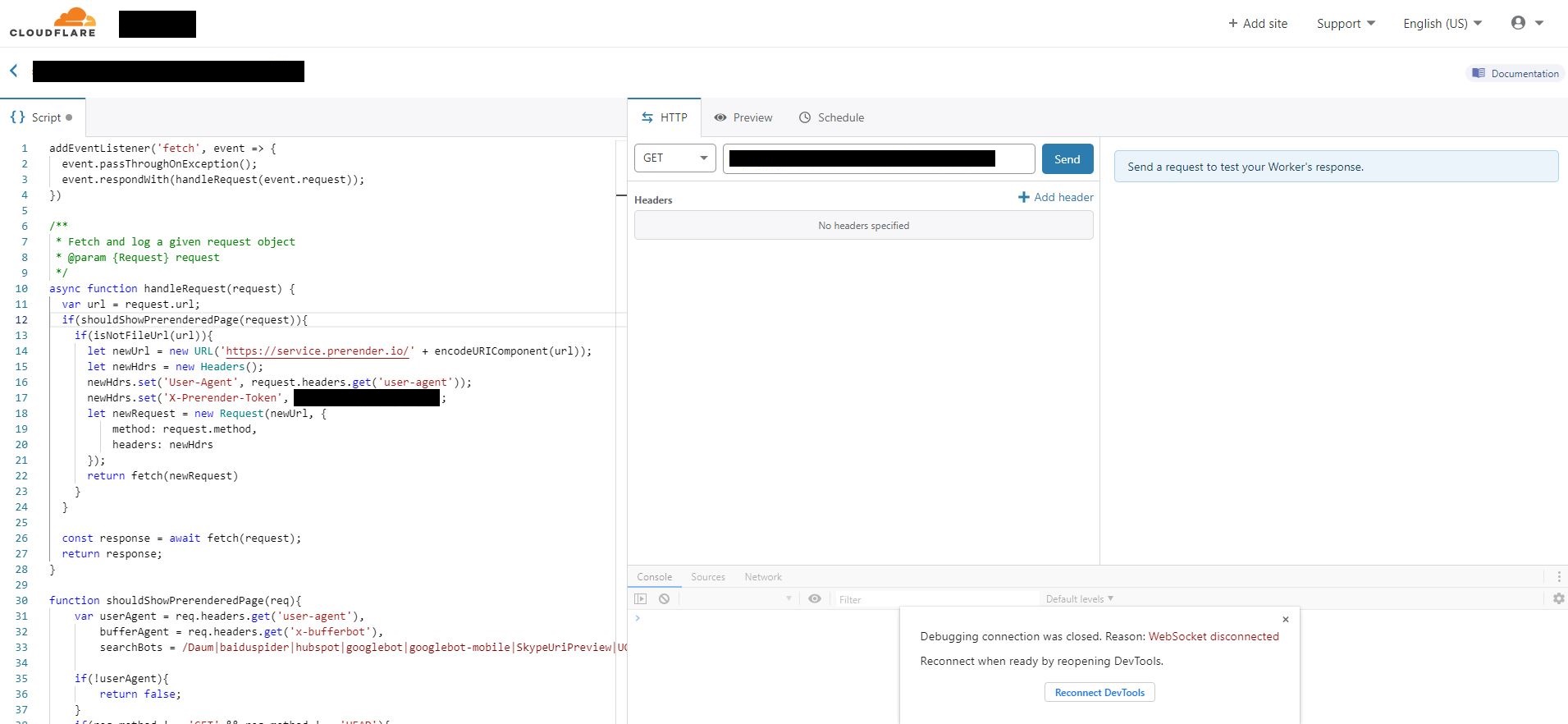

CloudFlare Workers

Here the magic happens! Cloudflare provides a service called Cloudflare Workers, which lets you write JavaScript that runs on Cloudflare's edge network on the high-performance V8 JavaScript engine. Basically, a Worker can intercept each request made to your site and deliver personalized user experiences. A typical use case is A/B testing, where we can try out changes to a site before enabling them for live traffic.

For our scenario, we need some sort of middleware that intercepts requests, checks whether they come from crawler bots, and dispatches those to Prerender.IO, which is exactly what Workers can do. In my previous post, I covered how to configure CloudFlare with your static website. Building on that, CloudFlare has documented the getting-started steps for your first CloudFlare Worker right here.

Once you have configured that, you can use this code to distinguish crawler bots from user requests. You will NEED to copy & paste the Bot Detector code, which is right here, directly into your Worker to test it out. Before deploying it, replace the blanked-out Prerender.IO token on line 17 with your own so the Worker can fetch cached static content from Prerender.IO.

There are two criteria the Bot Detector uses to decide whether a request comes from a crawler bot:

- ?_escaped_fragment_= query string - Google appears to have deprecated this scheme and stopped crawling with this query string.
- user-agent - the Bot Detector only pre-renders for the crawler bots configured in its list, which covers places where your site is likely to be crawled (e.g. Facebook, Twitter, LinkedIn, Slack, WhatsApp, etc.)
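To show the moving parts, here is a minimal sketch of a Worker built on those two criteria. It is not the Bot Detector code linked above: the bot list and ignored extensions are short, illustrative assumptions, PRERENDER_TOKEN is a placeholder, and it uses the classic service-worker syntax. Crawler requests are forwarded to Prerender.IO's service endpoint (https://service.prerender.io/ followed by the full page URL, authenticated with the X-Prerender-Token header); everything else passes through to the static Blazor site.

```javascript
// Minimal sketch of a bot-detecting Cloudflare Worker (service-worker syntax).
// Assumptions: PRERENDER_TOKEN is a placeholder; BOT_AGENTS is a short, illustrative list.
const PRERENDER_TOKEN = "YOUR_PRERENDER_TOKEN"; // placeholder: paste your own token here

// Lower-case substrings looked for in a crawler's User-Agent header
const BOT_AGENTS = [
  "googlebot", "bingbot", "yandex", "baiduspider", "duckduckbot",
  "facebookexternalhit", "twitterbot", "linkedinbot", "slackbot", "whatsapp",
];

// Static files (Blazor runtime, assemblies, images, sitemap) are never pre-rendered
const IGNORED_EXTENSIONS = /\.(js|css|wasm|dll|dat|br|gz|json|xml|txt|ico|png|jpe?g|gif|svg|webp|woff2?)$/i;

function shouldPrerender(url, userAgent) {
  if (IGNORED_EXTENSIONS.test(url.pathname)) return false;
  // Legacy AJAX crawling scheme, kept only for older crawlers
  if (url.searchParams.has("_escaped_fragment_")) return true;
  const ua = (userAgent || "").toLowerCase();
  return BOT_AGENTS.some((bot) => ua.includes(bot));
}

// fetchFn is injectable so the routing logic can be exercised outside Cloudflare
async function handleRequest(request, fetchFn = fetch) {
  const url = new URL(request.url);
  if (!shouldPrerender(url, request.headers.get("User-Agent"))) {
    return fetchFn(request); // regular visitors get the live Blazor WebAssembly site
  }
  // Crawlers get the cached, fully rendered HTML from Prerender.IO
  const prerenderUrl = `https://service.prerender.io/${url.toString()}`;
  return fetchFn(new Request(prerenderUrl, {
    headers: { "X-Prerender-Token": PRERENDER_TOKEN },
  }));
}

// Register the handler only when running inside a Worker
if (typeof addEventListener === "function") {
  addEventListener("fetch", (event) => {
    event.respondWith(handleRequest(event.request));
  });
}
```

Excluding static files keeps the Blazor runtime, assemblies and sitemap.xml out of Prerender.IO, so crawlers still receive the raw sitemap, and your page quota is spent only on real pages.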

Warning:

The free CloudFlare Workers plan is limited to 100,000 requests per day. For more requests, you can upgrade to the Workers Bundled plan for $5 per month, which includes 10 million requests per month.

Put it to test!

Once you have configured the previous sections, you can test quickly instead of waiting a couple of days for crawling to happen: copy the links of your Razor component pages and paste them into a Slack chat, a Facebook status, a tweet, etc. In my example, I used Slack chat to verify that the links are previewed with my configured meta tags, and YES, it worked: my site was crawled without an actual server hosted for pre-rendering, and requests from crawler user agents were delegated to Prerender.IO.
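You can also request a page the way a crawler would and check which tags come back. The sketch below is a hypothetical Node 18+ script (it relies on Node's built-in fetch): it sends a Slackbot-style User-Agent (an example string; any entry from your Worker's bot list works) and extracts the title, description and og: tags with simple regular expressions. That is enough for a smoke test, but it is not a real HTML parser.

```javascript
// Hypothetical smoke test: fetch a page as a crawler would and list its SEO tags.
// The regex assumes name/property appears before content inside each <meta> tag.
function extractSeoTags(html) {
  const tags = {};
  const title = html.match(/<title[^>]*>([^<]*)<\/title>/i);
  if (title) tags.title = title[1].trim();
  const metaRe = /<meta\s+(?:name|property)="([^"]+)"\s+content="([^"]*)"[^>]*>/gi;
  for (const [, key, content] of html.matchAll(metaRe)) {
    // Keep only the tags that matter for search results and link previews
    if (key === "description" || key.startsWith("og:")) tags[key] = content;
  }
  return tags;
}

async function checkAsCrawler(pageUrl, userAgent = "Slackbot-LinkExpanding 1.0 (+https://api.slack.com/robots)") {
  const response = await fetch(pageUrl, { headers: { "User-Agent": userAgent } });
  return extractSeoTags(await response.text());
}

// Usage: node check-seo.js https://your-site.example/some-page
const target = typeof process !== "undefined" ? process.argv[2] : undefined;
if (target) {
  checkAsCrawler(target).then((tags) => console.log(tags));
}
```

If the Worker is routing correctly, a Razor page URL should come back with that page's own description and og: values rather than the ones from index.html.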

In closing

With this solution architecture, you don't need ASP.NET Core hosted in the deployed environment: pre-rendering is taken care of by Prerender.IO, and CloudFlare Workers detect the crawler bots. Now you can share your links on social media without being limited to index.html. I believe that if Azure services like Azure Static Web Apps offered such a middleware scenario, it would definitely be a game changer. If you have any questions related to this solution, you can reach out to me via the social media links in the footer. Thanks!

Photo by